There are plenty of ways to manipulate photos to make you look better, remove red-eye or lens flare and so on. But so far the blink has proven a tenacious opponent of good snapshots. That may change with research from Facebook that replaces closed eyes with open ones in a remarkably convincing manner.

It’s far from the only example of intelligent “in-painting,” as the technique is called when a program fills in a space with what it thinks belongs there. Adobe in particular has made good use of it with its “Content-Aware Fill,” which lets users seamlessly replace undesired features, such as a protruding branch or a cloud, with a pretty good guess at what would be there if it weren’t.
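To show the basic idea of in-painting, here is the simplest possible version in plain Python. It is a swapped-in technique called diffusion in-painting, not Adobe’s far more sophisticated method: each missing pixel is repeatedly replaced with the average of its in-bounds neighbours until the hole blends into its surroundings. The function name, the grid-of-floats image format and the iteration count are assumptions made for illustration.

```python
from typing import List

Grid = List[List[float]]


def inpaint(image: Grid, mask: List[List[bool]], iterations: int = 200) -> Grid:
    """Fill pixels where mask is True by repeatedly averaging their 4-neighbours.

    Hypothetical toy: diffusion in-painting on a grayscale grid of floats.
    Known pixels (mask False) are never changed.
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]  # work on a copy; holes start from their old values
    holes = [(r, c) for r in range(h) for c in range(w) if mask[r][c]]
    for _ in range(iterations):
        new_vals = {}
        for r, c in holes:
            # Collect the up/down/left/right neighbours that lie inside the image.
            nbrs = [
                out[rr][cc]
                for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= rr < h and 0 <= cc < w
            ]
            if nbrs:  # a 1x1 image has no neighbours; leave such a pixel as-is
                new_vals[(r, c)] = sum(nbrs) / len(nbrs)
        # Update all holes at once (Jacobi-style), so the result is order-independent.
        for (r, c), v in new_vals.items():
            out[r][c] = v
    return out


# A missing pixel between a dark (0.0) and a bright (2.0) pixel becomes 1.0.
filled = inpaint([[0.0, 0.0, 2.0]], [[False, True, False]])
print(filled[0][1])
```

This works for smooth gaps like a patch of sky, but it can only blur what surrounds the hole; it has no idea what an eye looks like, which is exactly the limitation the rest of this piece is about.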

But some features are beyond these tools’ ability to replace, and eyes are one of them. Their detailed and highly variable nature makes it particularly difficult for a system to change or create them realistically.

Facebook, which probably has more pictures of people blinking than any other entity in history, decided to take a crack at this problem.

It does so with a generative adversarial network (GAN), essentially a machine learning system that tries to fool itself into thinking its creations are real. In a GAN, one part of the system (the discriminator) learns to tell real images of, say, faces from generated ones, while another part (the generator) repeatedly creates images that, based on feedback from the discriminator, gradually grow in realism.
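To make that adversarial loop concrete, here is a deliberately tiny toy in plain Python, not Facebook’s model. The “creation” part is a generator that turns random noise into single numbers, and the “recognition” part is a logistic-regression discriminator guessing whether a number is real. The target distribution (normal, mean 4), learning rate and step count are arbitrary assumptions; the generator uses the common non-saturating objective of maximizing log D(G(z)).

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminator(x: float, w: float, c: float) -> float:
    """Toy recognition part: estimated probability that x is a real sample."""
    return sigmoid(w * x + c)


def generator(z: float, a: float, b: float) -> float:
    """Toy creation part: maps random noise z to a fake sample."""
    return a * z + b


def train_toy_gan(steps: int = 2000, lr: float = 0.01, seed: int = 0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)  # assumed "real" data: Normal(4, 1)
        z = rng.gauss(0.0, 1.0)
        x_fake = generator(z, a, b)

        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)).
        d_real = discriminator(x_real, w, c)
        d_fake = discriminator(x_fake, w, c)
        w += lr * ((1 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(fake), using the updated discriminator.
        d_fake = discriminator(x_fake, w, c)
        grad_x = (1 - d_fake) * w  # d/dx of log sigmoid(w*x + c)
        a += lr * grad_x * z       # chain rule through x_fake = a*z + b
        b += lr * grad_x
    return a, b, w, c


a, b, w, c = train_toy_gan()
print(f"generator now centres its fakes near {b:.2f}")
```

Early on, the discriminator notices that fakes sit lower than real samples, and that feedback pushes the generator’s output upward. Even in this one-dimensional toy the two players can circle around each other instead of settling, which is one reason real GANs are notoriously finicky to train.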

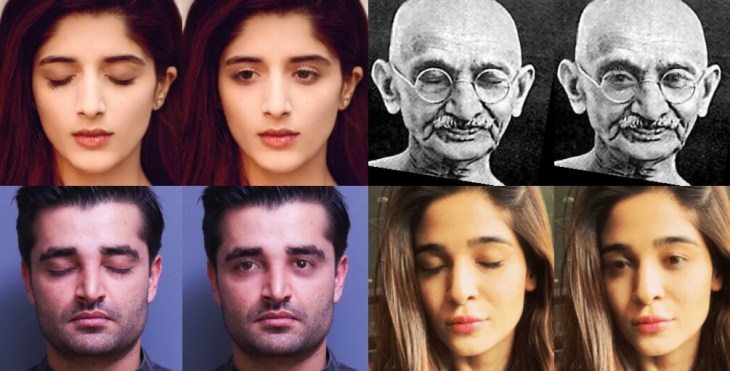

From left to right: “Exemplar” images, source images, Photoshop’s eye-opening algorithm and Facebook’s method.

In this case the network is trained both to recognize and to replicate convincing open eyes. This was already possible, but as you can see in the Photoshop examples above, existing methods left something to be desired: they seem to paste in a pair of eyes without much regard for consistency with the rest of the image.

Machines are naive that way: they have no intuitive understanding that opening one’s eyes does not also change the color of the skin around them. (For that matter, they have no intuitive understanding of eyes, color or anything at all.)

What Facebook’s researchers did was include “exemplar” data showing the target person with their eyes open, from which the GAN learns not just what eyes should go on the person, but how this particular person’s eyes are shaped, colored and so on.
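One plausible way to picture the exemplar idea is as extra conditioning input: the generator receives the photo with its eye region blanked out, a mask marking that region, and a reference photo of the same person with open eyes. The sketch below only assembles such an input from plain Python grids of grayscale values; the function name, the channel order and the box format are hypothetical choices for illustration, not taken from Facebook’s code.

```python
from typing import List, Tuple

Grid = List[List[float]]


def build_exemplar_input(
    image: Grid, eye_box: Tuple[int, int, int, int], exemplar: Grid
) -> List[Grid]:
    """Return hypothetical generator input channels: [masked image, mask, exemplar].

    eye_box is (top, left, bottom, right) with bottom/right exclusive (an assumption).
    The exemplar is a same-sized photo of the same person with open eyes.
    """
    top, left, bottom, right = eye_box
    h, w = len(image), len(image[0])
    if len(exemplar) != h or len(exemplar[0]) != w:
        raise ValueError("exemplar must have the same size as the image")

    masked = [row[:] for row in image]  # copy so the caller's photo is untouched
    mask = [[0.0] * w for _ in range(h)]
    for r in range(top, bottom):
        for c in range(left, right):
            masked[r][c] = 0.0  # erase the closed eye
            mask[r][c] = 1.0    # mark where the generator must paint
    return [masked, mask, [row[:] for row in exemplar]]


photo = [[0.7, 0.7, 0.7], [0.7, 0.7, 0.7]]
reference = [[0.2, 0.2, 0.2], [0.2, 0.2, 0.2]]
channels = build_exemplar_input(photo, (0, 1, 1, 3), reference)
print(channels[1])  # the mask channel
```

In a real model these channels would be fed to a convolutional generator, and the exemplar could also inform the discriminator’s judgment; this sketch stops at building the input.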

The results are quite realistic: there’s no color mismatch or obvious stitching, because the recognition part of the network has learned that that’s not how this person looks.

In testing, people mistook the fake open-eyed photos for real ones, or said they couldn’t be sure which was which, more than half the time. And unless I knew a photo had been tampered with, I probably wouldn’t notice it while scrolling past in my newsfeed. Gandhi looks a little weird, though.

It still fails in some situations, creating weird artifacts if a person’s eye is partially covered by a lock of hair, or sometimes failing to recreate the color correctly. But those are fixable problems.

You can imagine the usefulness of an automatic eye-opening utility on Facebook that checks a person’s other photos and uses them as reference to replace a blink in the latest one. It would be a little creepy, but that’s pretty standard for Facebook, and at least it might save a group photo or two.